Notes on Resolution

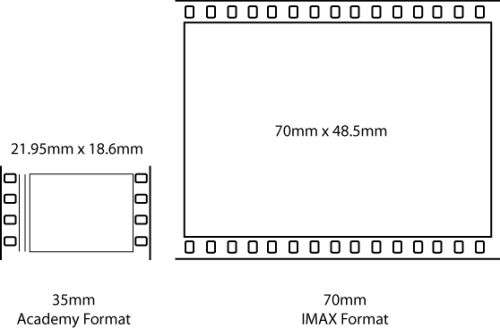

Standard anamorphic film with analog soundtracks visible between the image and perforations on the left. The apparent noise you see on the outside of, and in between the perforations is actually the digital soundtrack.

When the iPhone 4 was first revealed to the world, one of its biggest selling points was a fancy new “retina display”, which was just a curiously stupid way of saying that it had a very high resolution. When Nikon released the D300, it too had a screen with significantly higher resolution than any digital camera at the time, and while that feature wasn’t publicized in quite the same way as Apple’s announcement, the review on popular camera review site dpreview.com sums it up well: “This high resolution screen really has to be seen to be appreciated, it’s beautifully detailed and extremely smooth in appearance because the tiny gaps between dots are too small to be seen with the eye.”

Such a quote got me thinking. A lot of TV manufacturers (or at least their publicity departments) like to talk about fantastic new “future proof” standards. Obviously, it is silly to believe that you can make anything future-proof, especially in this current age where technology becomes obsolete so quickly, and it seems just as silly to use it as a selling point. But they do, and it works. I thought about future proofing and it made me think about laptop computers. When I was in high school, your laptop could become obsolete in a matter of months. Within a few months, a newer laptop at a comparable price point would perform significantly faster than the one you had, and by “significantly faster” I mean it in a way where even people who were totally computer illiterate would notice. Within two years, your laptop would be practically unusable. Nowadays, this trend seems to be slowing significantly. A laptop that I purchased three years ago, while a little sluggish, is still perfectly usable, and considerations like size and weight have become more important than performance, hence the rise of the very cute “netbook” laptops. Why has this happened? I think it has to do with diminishing returns, and the nature of how we use computers.

The vast majority of people in the world use computers for word processing, managing spreadsheets, and communicating via the internet. None of these tasks requires a huge amount of computing power. Even people who spend a lot of their computer time playing games have slowed down somewhat in their quest for ever-faster processors, as the advent of hard-wired graphics-specific processors (graphics cards) can give them more bang for their buck. High-end games, high-end photo processing and video processing are really the only applications left which continue to push a computer’s computing power. For the majority of us, the technology has matured and reached a point where more powerful computers won’t contribute significantly to making the user experience better – and indeed resources are now being diverted to other aspects such as the user interface, both in hardware and software, where the improvements would more significantly enhance the user experience.

Does that mean that current computer processors are future-proof for most of the population? Not really. New screens, new methods of interaction like voice and touchscreen will likely require faster processors to render the integration of these solutions seamlessly, and there will always be those higher-end users whose work legitimately requires the fastest processors that can be made (weather forecasters, special effects studios, and people who try to find the largest prime numbers). I was wondering if the same can be said for screen resolution. Obviously, there will always be a need for higher and higher resolution screens and capture for highly specialized applications, but for most of the population, there probably is a reasonable “specification” after which most wouldn’t know the difference.

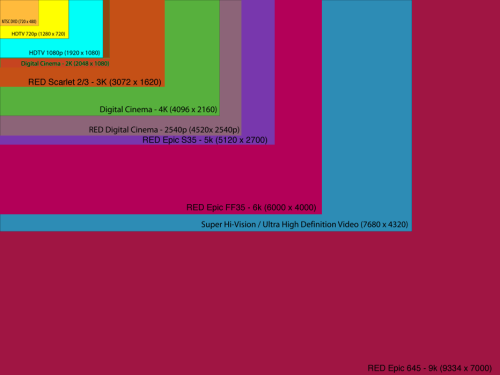

When asked about why Inception wasn’t filmed in 3D, producer-writer-director Chris Nolan replied that shooting in digital would have meant what was, to him, an unacceptable compromise in quality. The “Fusion Camera System” that James Cameron developed and used for Avatar is basically two Sony CineAlta cameras bolted together to film a stereoscopic image. Those cameras contain 2/3″ image sensors of approximately 2 megapixels each. Compare that with the resolution of 35mm film which is approximately 24 megapixels (for a 135-spec imaging area i.e. a still photograph), and you can see what Nolan was getting at.

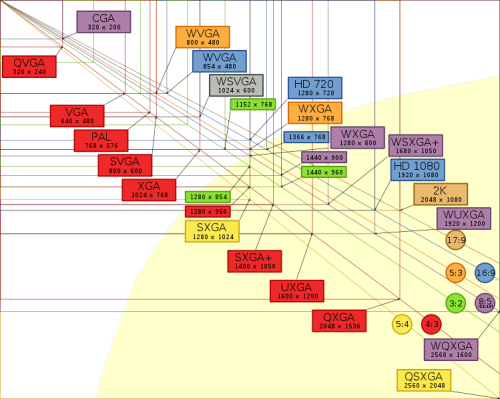

Of course, 1080p high definition is the highest resolution that any modern TV can reproduce. The only reason you would ever need to shoot in higher resolutions than that is if you intend the image to be blown up to the size of a movie screen, or possibly even an IMAX screen. Eventually, however, we can reasonably expect TV technology to improve to the point where higher resolutions are possible (of course, bandwidth would have to increase significantly to allow for that amount of data throughput, as would data storage to be able to record it). How can we possibly determine the theoretical limit of the quest for ever-higher resolution?

The easy answer to this would lie in the human eye. There would presumably be a point past which any increases in resolution would be imperceptible. By definition, what is known as 20/20 vision or “normal” visual acuity corresponds to being able to distinguish line pairs (telling the difference between two skinny lines and one slightly thicker one) at about one minute of arc. That means that if you draw a line from each of those two lines to your eye, and measure the angle between them, that angle would be a 60th of a degree. The maximum possible visual acuity for a human eye (limited by diffraction) is, in these terms, about 0.4 of a minute of arc (for our eyes to have better resolving power, our species would need to evolve bigger eyes).[1] How does this translate into the resolution of a screen?

Say I’m sitting three meters (just under ten feet) from a TV screen. Say that TV is about 106cm diagonally across (about 42″). If it is a standard example of a 16:9 ratio widescreen TV, then that gives the dimensions of the screen to be approximately 92.4cm by 52cm. A little bit of rough/basic trigonometry (it’s $\theta \approx \tan^{-1}(h/d)$, with $h$ the screen height and $d$ the viewing distance, if you must know) reveals the vertical angle of view to be about 9.5 degrees. That’s 570 arc minutes (which is about the resolution of standard PAL TV!), which comes to about 1425 lots of 0.4 arc minutes if you happen to have theoretically-optimal eyesight. This probably means that people sit far too close to their televisions. In any case, this means that 1080p is over and above the average resolution of the human eye, if you happen to sit 3m away from your 42″ widescreen TV. If you sit a meter away, then you’ll need at least 1600 vertical lines of resolution for you not to distinguish pixels.
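The TV arithmetic can be sketched in a few lines of Python. This is only a rough check, assuming a flat screen, a viewer centred on it, and the exact formula 2·arctan(h/2d) rather than the rough trigonometry used in the text; the function names are made up for illustration. Under those assumptions the vertical angle at 3m comes out closer to 9.9 degrees than 9.5, and a 1m viewing distance asks for about 1750 lines at 20/20 acuity, so the figures shift a little but the conclusions do not.

```python
import math

def screen_size_cm(diagonal_cm, aspect_w=16, aspect_h=9):
    """Width and height of a screen from its diagonal and aspect ratio."""
    scale = diagonal_cm / math.hypot(aspect_w, aspect_h)
    return aspect_w * scale, aspect_h * scale

def angle_of_view_arcmin(size_cm, distance_cm):
    """Angle subtended by an edge of length size_cm, viewer centred on it."""
    half_angle = math.atan(size_cm / (2 * distance_cm))
    return math.degrees(2 * half_angle) * 60

def lines_needed(size_cm, distance_cm, acuity_arcmin=1.0):
    """Lines required so that each line spans exactly acuity_arcmin."""
    return angle_of_view_arcmin(size_cm, distance_cm) / acuity_arcmin

# 106cm (42") 16:9 TV, viewed from 3m and from 1m,
# at 20/20 acuity (1.0') and at the diffraction limit (0.4').
width, height = screen_size_cm(106)
for distance_cm in (300, 100):
    for acuity in (1.0, 0.4):
        lines = round(lines_needed(height, distance_cm, acuity))
        print(f"{distance_cm}cm, {acuity}': {lines} lines")
```

Anything above the printed line count is, by this measure, resolution the eye cannot use at that distance.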

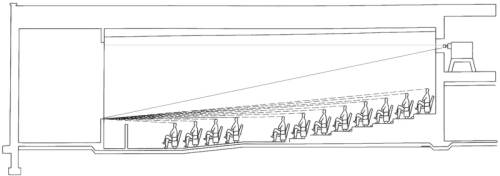

In the cinema, however, the screen takes up a much greater angle of view (especially if you sit in the front row of an IMAX cinema, like I did once). In the illustration, even if you happen to sit in the front row, you’re probably looking at no more than a 45 degree angle of view (and IMAX cinemas are similarly arranged to accommodate the larger screen). This ends up needing about 2700 lines of vertical resolution. Luckily the resolution of film is about 24 megapixels, or about 6000×4000 pixels, and at this point, the quality of lenses and the accuracy of focus become far more important factors in considering the quality of the image.

An interesting side note: almost all films these days are recorded in what is known as “anamorphic format” which, in a technical sense, is where each frame is four perforations tall and has an aspect ratio of 1:1.37 to allow for sound tracks in the image area. The standard aspect ratio of motion pictures is 1:2.39, so to achieve this, special (very expensive) lenses are used for filming and projection. The 24 megapixel resolution of film I often quote is from the still photography format, which is about 7.5 perforations long and 36×24mm. Strictly speaking, since the area of film being used by motion pictures is 21.95×18.6mm, the corresponding resolution ends up being about 3660×3100 pixels (11.3 megapixels) or 3100 lines of vertical resolution. Consider also that the anamorphic lens “stretches” the image sideways to achieve the 1:2.39 aspect ratio, and you’re spreading those 3660 pixels over an even greater area (this is also why lens flare in cinematic productions is always oval-shaped). This all results in the resolution of film in the context of a movie cinema being slightly higher than what is discernible by the human eye in the vertical direction, but lower in the horizontal direction, at least if you sit in the front row. I guess the take-home lesson from this is: don’t sit in the front row of movie cinemas.
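As a rough check on the film numbers, here is a small Python sketch. It assumes, as the text does, that a 36×24mm still frame is worth 6000×4000 pixels and that the same pixel density applies to the 21.95×18.6mm motion picture frame. It also assumes a flat 2.39:1 screen filling 45 degrees vertically for a centred front-row viewer with 20/20 vision; the function names are hypothetical. On those assumptions the horizontal requirement comes out around 5400 pixels, against roughly 3660 available.

```python
import math

STILL_FRAME_MM = (36.0, 24.0)   # 135-format still frame
STILL_FRAME_PX = (6000, 4000)   # the 24 megapixel figure used in the text

def scaled_resolution(width_mm, height_mm):
    """Pixel dimensions of a film area at the still frame's pixel density."""
    px_per_mm = STILL_FRAME_PX[0] / STILL_FRAME_MM[0]
    return round(width_mm * px_per_mm), round(height_mm * px_per_mm)

def horizontal_arcmin(vertical_deg, aspect):
    """Horizontal angle (arc minutes) of a flat screen, given its vertical angle."""
    half_v = math.radians(vertical_deg) / 2
    half_h = math.atan(aspect * math.tan(half_v))
    return math.degrees(2 * half_h) * 60

film_w, film_h = scaled_resolution(21.95, 18.6)
print(film_w, film_h, round(film_w * film_h / 1e6, 1), "megapixels")

# Pixels needed at one arc minute each, vertically and horizontally.
print("vertical:", 45 * 60, "needed,", film_h, "available")
print("horizontal:", round(horizontal_arcmin(45, 2.39)), "needed,", film_w, "available")
```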

So, we now have a general idea for theoretical maximum useful resolutions for everyday things like movie cinemas and televisions and know that the number is a lot less important than the actual angle of view per pixel. In reality those maximums are probably a bit lower because viewing conditions (and our eyes themselves) are not always optimal. For example, when it is dark (like at the movies) your visual acuity is slightly lessened because your pupils are maximally dilated to try to let more light in. We can always blow something up onto a large enough screen and then walk towards it until the angle of view represented by each pixel is large enough for our eye’s resolving power to “beat” the resolution of the image. But how about small screens like the D300 mentioned above, and the 4th-generation iPhone?

By self-experiment, I have determined the minimum focusing distance for the human eye to be about 7cm. This is by no means a definitive experiment (especially since the sample size was 1), but I would imagine the real figure to be not far off. (If I had removed my contact lenses, that distance would almost certainly be shorter, but that would be cheating.) So, let’s do the calculations for the iPhone screen (seeing as it’s slightly bigger than the screen in the back of a DSLR). The screen is about 73.97mm x 49.31mm, so a 70mm minimum focusing distance for the eye gives a vertical angle-of-view of about 47 degrees, requiring a resolution of about 2800 pixels, far more than the iPhone’s 960 vertical pixels. Of course, nobody reads their phone at 70mm from their eyes in normal everyday use, and indeed Apple’s own claim is that the screen exceeds the eye’s resolving power when held at 300mm from the eye. Based on the 20/20 vision definition of the eye’s resolving power, the phone would need a pixel count of greater than 788, which it satisfies, although if you happen to have slightly better than 20/20 vision, then you may need more pixels. It is, in any case, quite close.
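The question can also be turned around: at what distance do 960 pixels along the 73.97mm side stop being distinguishable? The Python sketch below assumes a centred viewer, the exact formula 2·arctan(h/2d), and 20/20 acuity of one arc minute per pixel; the function names are invented for the example. With the exact formula the 300mm requirement comes out nearer 840 pixels than 788, still under 960, and the break-even distance lands at roughly 263mm, which fits the “quite close” verdict.

```python
import math

LONG_SIDE_MM = 73.97   # long side of the screen, from the text
LONG_SIDE_PX = 960     # pixels along that side

def pixels_needed(size_mm, distance_mm, acuity_arcmin=1.0):
    """Pixels along size_mm so that each spans acuity_arcmin, viewer centred."""
    angle_deg = math.degrees(2 * math.atan(size_mm / (2 * distance_mm)))
    return angle_deg * 60 / acuity_arcmin

def break_even_distance_mm(size_mm, pixels, acuity_arcmin=1.0):
    """Distance at which `pixels` across size_mm exactly match the given acuity."""
    total_angle = math.radians(pixels * acuity_arcmin / 60)
    return size_mm / (2 * math.tan(total_angle / 2))

for distance_mm in (70, 300):
    needed = round(pixels_needed(LONG_SIDE_MM, distance_mm))
    print(f"{distance_mm}mm: {needed} pixels needed, {LONG_SIDE_PX} available")

print("break-even distance (mm):",
      round(break_even_distance_mm(LONG_SIDE_MM, LONG_SIDE_PX)))
```

Passing `acuity_arcmin=0.4` gives the stricter, diffraction-limited version of the same comparison.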

Taking the 70mm minimum into consideration, the minimum pixel size resolvable by the human eye would be 0.02 of a millimeter (about 20 microns – or a fifth the width of a human hair, which explains why we can distinguish single strands of hair). Let’s say I wanted my 15″ Macbook Pro’s screen to have the maximum useful resolution to the human eye according to that – then its screen resolution would have to be 16500×10300. If we go by Apple’s iPhone resolution, then it would only need to be 4240×2650 (for reference, its current resolution is 1680×1050, and for the record I can see pixelation from my normal seated position of about 400mm away from the screen, but it really doesn’t bother me). This knowledge can also give us an indication of the relevance of megapixels in digital cameras – for a 15x10cm print, 1930×1280 pixels (about 2.5 megapixels) is enough, and for a 30x20cm print 3860×2560 (about 10 megapixels) should do the trick. Keeping in mind that an A4-sized print is actually quite large, and the vast majority of digital camera photos these days never make the trip from a hard drive or the internet into the real world, it’s a wonder that compact camera resolutions didn’t stop at 6 megapixels to concentrate on all the other stuff that contributes to image quality.
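These pixel-size figures are easy to reproduce approximately. The Python sketch below makes a few assumptions that are not in the text: the “15 inch” laptop panel is taken to be 15.4 inches diagonal at 16:10, the iPhone density is taken as Apple’s quoted 326 pixels per inch, and acuity is one arc minute. The helper names are made up. The results land within a few percent of the numbers quoted above, which is about as much as rough trigonometry deserves.

```python
import math

MM_PER_INCH = 25.4
IPHONE_PPI = 326  # assumed: Apple's quoted pixel density, not stated in the text

def pixel_pitch_mm(distance_mm, acuity_arcmin=1.0):
    """Smallest resolvable pixel size at a given viewing distance."""
    return distance_mm * math.tan(math.radians(acuity_arcmin / 60))

def screen_size_mm(diagonal_in, aspect_w, aspect_h):
    """Width and height in mm from a diagonal in inches and an aspect ratio."""
    scale = diagonal_in * MM_PER_INCH / math.hypot(aspect_w, aspect_h)
    return aspect_w * scale, aspect_h * scale

def pixels_for(width_mm, height_mm, pitch_mm):
    """Pixel dimensions needed to cover an area at a given pixel pitch."""
    return round(width_mm / pitch_mm), round(height_mm / pitch_mm)

eye_pitch = pixel_pitch_mm(70)          # limit at minimum focusing distance
iphone_pitch = MM_PER_INCH / IPHONE_PPI

laptop = screen_size_mm(15.4, 16, 10)   # assumed panel size
print("pitch at 70mm (mm):", round(eye_pitch, 4))
print("laptop at eye limit:", pixels_for(*laptop, eye_pitch))
print("laptop at iPhone density:", pixels_for(*laptop, iphone_pitch))

for print_mm in ((150, 100), (300, 200)):
    w, h = pixels_for(*print_mm, iphone_pitch)
    print(print_mm, "->", (w, h), round(w * h / 1e6, 1), "megapixels")
```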

Of course resolution isn’t everything, and things like frame-rate (“optimal” frame rate is a much more complex question than resolution), shutter speed, and the fineness of colour gradation probably have a greater impact on how well-perceived an image may be. Not only that, but the quality of the optics, both for capture and projection, will also come under closer and closer scrutiny as the resolving power of sensors increases (the above reasons are why a photo from a 12 megapixel compact camera sucks when compared to a 12 megapixel photo from a DSLR). The resolution race still has a way to go, but the finish line at least seems to be in sight. The second Star Wars prequel was one of the first major films shot entirely in digital, and even though it sucked the fat one, it demonstrated that 2 megapixels, despite being a far cry from film resolution, was quite passable for most (remember Slumdog Millionaire? That was shot in digital with an SI-2k, whose resolution is only slightly greater than the technology of the Star Wars prequel). With the RED Scarlet and Epic (which can shoot 6000×4000, or 24 megapixels) just on the horizon, we’re finally going to be able to leave the resolution race behind and concentrate on more meaningful aspects of image reproduction.

[1] It is also important to note that this is the resolution of the central portion of our visual field; it gets much worse towards the edges quite quickly.

This is very interesting. I won’t do your calcs again, but thank you. I think you could clarify – or extrapolate using trends you’ve identified – when various devices/media will fully outstrip eye-resolving power. Then we can take bets on that.

There’s however one crucial thing you’ve missed. You are making two very dangerous assumptions in eye-resolving power, namely that:

a) the visual cognition engine (let’s call this the VCE, and by that I mean the eyeball-visual cortex-cognition machinery package) has no post-processing capacity

b) the VCE is merely a syntactic engine (i.e. it is content agnostic, just processes input signals, has no ‘semantic’ power).

I’ll clarify both points.

On the first point, what happens in so-called digital zoom of a digital camera is that the image is ‘enhanced’ in various ways conditional on the power of the on-board processor and sophistication of its algorithms. Evidence suggests that this is a huge piece, by analogy, of the human VCE. I.e. that, post-signal, further acuity is achieved by sheer, ‘bit-level’, corrections and supplements, once the eye has captured an image.

A crude example is font-smoothing on standard computer screens: the sub-pixel colour rendering doesn’t make the font curves any less pixellated, but it does prompt the mind to ‘do the rest’ and ‘see’ a smooth curve, rather than a pixellated curve. The ways that such post-processing can be achieved, or prompted, are really not known. Thus ‘eye resolution’ and ‘acuity’ are not mere signal-input and raw signal-processing related issues, as you have presented them.

On the second point, this is even more interesting. Most visual information that humans actually process has a huge semantic content, and the VCE is massively attuned to seeking out the core semantic content fast, and ignoring the rest. The studies of eye-motion, non-autists vs autists, play this out: autists do not necessarily focus on facial features, because they are not necessarily seeking to determine the emotional status of an agent a priori; natively, non-autists do this as a primary visual task.

This is to say, human visual perception does not absorb an entire frame’s worth of information at full resolution, and then somehow parse it out to its semantic components: it rushes to focus on key semantic elements, chiefly human facial and body language, movement, key colours, and then builds up resolution around that, only building resolution further afield if time and key-object status permits.

This is crucial to understand ‘theoretical maximum acuity’ (TMA) of the VCE. Because, signal-wise, we get straight into the realm of how well-signalled key /semantic/ content is: i.e. how intelligible what the VCE /thinks/ should be clear is semantically. If, for example, a digital face is presented on screen in TMA, but presents strange features – e.g. apparent distortions of shape from normal – it seems like the VCE will call out ‘low acuity!’ and seek further data, not of a /syntactic/ (i.e. raw visual signals) but /semantic/ type.

Note that while syntax-level processing is /post/-processing, i.e. post-signal-input, the semantic layer seems to be both pre-and post: if you show a shot of a doorway, the VCE is hovered on the doorway waiting for someone to enter (pre-processing), and then, once someone has entered, trying to work out what their body, face, movements are signalling about the situation (post-processing).

The point is that the mind calls ‘acuity’ when it /believes/ it sees /someTHING/. It does not call acuity when it sees unrecognisable and semantically opaque objects delivered in TMA. I’m prepared to bet you could experiment, and present images of huge semantic clarity (using some control tricks to get the mind to believe it was viewing in high resolution) with pixellated, low-res syntactic character, and the mind can be tricked into thinking it’s high res. And vice versa.

So, those were my main points: syntactic-level post-processing and semantic-level pre-and-post-processing, as a feature of determining the TMA of the VCE. Yum.

Here’s my bet, for what it’s worth. I think there are higher orders of visual signal processing, in the way that I believe there are higher orders of audio signal processing, and one day (later, rather than sooner) we will be able to exploit these.

We are told that MP3 is ‘lossless’ compression, to the extent that it strips out ‘inaudible signals’. I seriously doubt whether higher harmonics, which individually are beyond the syntactic capacity of the ear to hear, are actually beyond the ACE (auditory cognitive engine), in context, in particular when audio-semantic context pushes the ACE to seek, unconsciously, such features of the signal.

Thus, I believe that the VCE can detect ‘higher-order’ signals, in particular in semantic contexts, and indeed that it does this. For example, I suspect that, IRL, the VCE is over-processing, i.e. generating far higher visual acuity than the TMA would permit, for example, facial images. I think films sooner or later will get with this: and will deliver semantically-variable resolution in each image-frame. I.e. images much higher than TMA will be rendered (delivered dynamically possibly in-camera, or left in situ in post-process compression) for, e.g. faces, in the films of the not-so-near, optimal acuity film future.

Just guessin’.

And I believed the same

You’re absolutely right – there’s a LOT more to seeing than your standard “computer definition” of it; that is, simply being able to render pixels. Our brains are hard-wired for pattern recognition and the patterns they’re good at recognizing are determined largely by our evolution.

There was a very interesting article I read a few months back about a guy whose visual cortex was damaged in a car crash, so he couldn’t consciously see. BUT everything was intact – eyes, optic nerves etc. After a while, it was discovered that he could “see” on a subconscious level. Like… he couldn’t form an image in his mind, but he could walk through a maze without bumping into things no problem because he could “sense” where things were (and if he closed his eyes, then he’d start bumping into things).

This more or less proves your point about detecting and interpreting higher order signals, or even “lower” order signals but in areas of our subconscious. I’ve actually been pretty certain for a long time that the “lossless” compression (I really wish that PR departments would stick to strict mathematical definitions) of MP3s actually loses a lot of information that is perceptible, even at a conscious level, to our ears, which is probably why, as hard drive space becomes cheaper and cheaper, people are encoding their MP3s at higher and higher bitrates. It won’t be long until they effectively are lossless and the files are only marginally smaller than the original rips.

In digital image processing, and to some extent film making, a lot of image processing already goes on to trick our eyes into seeing more detail than there really is. I’m not a huge fan of this, but that’s mostly because it is often overused and for some reason I spot this stuff easily (oversharpening artifacts especially). Actually, with regards to the question of framerates, this comes up a lot, because the technology exists to render hundreds of frames per second of perfectly focused, crisp motion… but that’s just not how our eyes see things. We can identify a still image after 1/200 of a second, sometimes shorter depending on the individual, but when things move, we see motion blur, and without it we reject the image as fake.

This is also why film makers are always after super wide aperture lenses. They don’t need to freeze motion at 1/4000 of a second like a sports photographer with the same lens would. They need that shallow depth of field because, when we look at something, our eyes focus on it and everything else becomes a bit blurry, or sometimes very blurry; even if everything is in the same plane of focus. Still photographers use this to draw our eyes to certain parts of an image while motion picture photographers use it to stop their scenes from being rejected as fake by our eyes.

What I’m more afraid of in the future is subliminal messaging hidden in the higher-order layers of an image. Rather like the way survey questions can influence the result slightly by framing the question in certain ways, or by using certain “loaded” words. Someday this will probably be achievable with an image. I think it already happens to an extent with sound, and actually with sound, there’s potential for a lot more information flow.

It would be much harder to determine an upper-bound for information flow in the VCE though, because it would vary from person to person. When I was getting an eye test, with my contacts in, I could read the chart all the way down to one level after 20/20, but I could identify the letters correctly for the next level too, even though I couldn’t really see them. That’s probably because I spend far too much time looking at unprocessed digital photos VERY close up and have become used to determining shapes and text from fuzzballs. I’m not so sure that a less-experienced person with similar visual acuity would do so well on that test.