Musings on Torrents

Torrents are wonderful things. For those who are unfamiliar (and it is entirely possible to be unfamiliar yet still be a regular user), torrents are a system by which files are shared over a network of computers. If the Internet is the era-defining black swan of our generation, then torrents are the cargo trucks on our superhighway. They are the natural evolution of the solution to the problem of moving files from place to place.

First, a bit of context and technical background. Back in the good old days, when the Internet was just starting up and a gigabyte was considered huge for a hard disk, bandwidth was very limited. Most people connected via modems over telephone lines, with carrier signals that sounded like faxes. Sending large files from computer to computer was difficult: HTTP (Hypertext Transfer Protocol) was limited in this capacity, and FTP (File Transfer Protocol) was clumsy and often required the swapping of passwords and connections between trusted computers. This basically meant that you had to “know”, in some sense, the person with whom you exchanged files, or else download files over slow and unreliable connections.

Then came P2P, or peer-to-peer, networks. You’ve probably heard of Napster, which was one of the first widely used P2P networks. The nature of P2P is exactly what it sounds like. In the case of Napster, the tracking of clients was still centralized, but once a request for a file had been matched with a computer holding that file, the two computers would connect to each other directly and the file transfer could take place. Although Napster was eventually shut down, it paved the way for other P2P systems such as Gnutella, which are decentralized and allow a single file to be downloaded from multiple hosts at once, further speeding up the spread of data.

Then came BitTorrent. In a technical sense, the whole mechanism for how torrents work fascinates me. With something like the Gnutella network, you can download a file from as many different computers as have the file, which is great and can be very fast, although… think about what happens when a file first hits the net.

One person has the file, great. Let’s say you’ve got a network of 10 computers, and the other nine all want the file. They all start downloading from this one dude, so this one guy’s network connection gets maxed out until everyone else has the file. We can make this interesting and say that it takes one hour to transfer this file from one computer to another (to define our bandwidth), so it will take nine hours (because the first computer’s bandwidth is split nine ways) to transfer this file from one computer to the other nine simultaneously. You could be very clever about it and transfer it to three computers first (taking three hours), then have those four computers with a complete copy of the file transfer it on to the remaining six (taking one and a half hours), for a total of four and a half hours. Actually, given the above constraints, the quickest way of doing it is for the first host to simply upload to one other host (one hour), then for those two to upload to one each (one more hour), then for those four to upload to the remaining six (one and a half hours), for a total of three and a half hours.

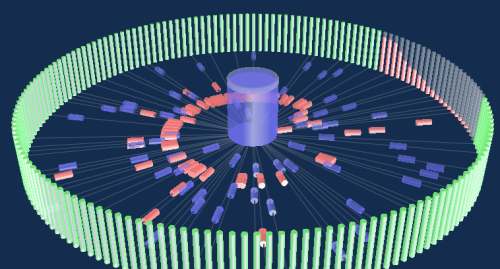
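The three schedules above are easy to check with a few lines of Python. This is only a sketch of the toy model in this post: the function name `schedule_hours` is made up for illustration, every computer is assumed to upload one full file per hour, and (my assumption, not stated above) no computer can download faster than one file per hour either.

```python
from fractions import Fraction


def schedule_hours(stages):
    """Total hours to hand complete copies of a file down a staged schedule.

    `stages` lists how many new computers receive the file in each stage.
    Only computers that already hold a complete copy may upload.
    """
    have = 1  # the original host
    hours = Fraction(0)
    for receivers in stages:
        # All current holders upload in parallel and share the work evenly.
        # A stage never takes less than one hour (assumed download cap).
        hours += max(Fraction(receivers, have), Fraction(1))
        have += receivers
    return hours


print(float(schedule_hours([9])))        # everyone pulls from the host: 9.0
print(float(schedule_hours([3, 6])))     # three first, then the rest: 4.5
print(float(schedule_hours([1, 2, 6])))  # doubling, then the rest: 3.5
```

Whatever schedule you pick, the bottleneck is the same: nobody can help until they hold the whole file.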

As you can see, the main difficulty is that a computer needs a complete copy of the file before it can begin to give it away to another computer. In the case of very large files, this can become problematic, especially if the network has a lot of computers and connections between any two are not always reliable. This is where torrenting comes in. In a torrent, a file is split into many different, very small pieces, and “seeded” to the “swarm”. The real kicker with a torrent is that a computer may begin to upload different pieces of a file to other computers before it has completely downloaded the original file. It seems like a very small adjustment to the original parameters, but the implications are mind-boggling. Reconsider our original situation.

The original file host splits the file into nine pieces and distributes a different piece to each of the other nine computers (taking only an hour). Each of the nine computers then sends its “ninth” to the eight other computers in the network (taking possibly a little less than an hour), and we’re done. But it gets better: because you don’t have to wait until you have the full copy of a file before you can send bits of it onwards, consider what happens when the pieces are smaller. Say the original host splits the file into thirty-six pieces and distributes the first nine to the nine other computers during the first fifteen minutes. In the next fifteen minutes, the next nine pieces are distributed from the original host AND all of the first nine pieces are distributed among the nine. So everyone has a complete copy fifteen minutes after the first hour (the hour being the time it takes for the host to fully upload all the pieces of the original file). It is easy to see that the smaller the pieces you split the file into, the less time it takes after the initial upload before all the computers in the network have their own complete copy of the file. Another beautiful thing about this system is that once a few full downloads have been completed, especially on very large networks, computers can drop out and come back with minimal disruption to the whole deal, whereas before, the dropping out of a computer with the complete file would slow things down considerably.
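To see how the finish time shrinks as the pieces get smaller, here is a rough sketch of the round-by-round picture described above. The name `swarm_hours` is hypothetical, the number of pieces is assumed to be a multiple of the number of downloaders, and the final relay is counted as one full round, so the result is a slight overestimate (relaying to eight peers takes a little less than a round).

```python
from fractions import Fraction


def swarm_hours(downloaders, pieces):
    """Hours until every downloader holds the whole file (round-based estimate).

    In each round the host hands one new piece to every downloader while the
    downloaders pass the previous round's pieces among themselves.
    """
    if pieces % downloaders != 0:
        raise ValueError("pieces must be a multiple of downloaders")
    rounds = pieces // downloaders
    round_length = Fraction(1, rounds)  # the host's one hour, split into rounds
    # The host needs the full hour; one extra round relays the last batch.
    return 1 + round_length


print(float(swarm_hours(9, 9)))    # 2.0
print(float(swarm_hours(9, 36)))   # 1.25
print(float(swarm_hours(9, 360)))  # 1.025
```

In this model the total never drops below the one hour the host needs to upload the file once, but it gets as close to it as you like.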

There’s something nice about the way this all works. You could, in theory, have a very large network in which no one computer has the complete file yet still be able to complete the download from the fragments.

Now, most BitTorrent programs keep a record of your upload/download ratio, and some people won’t let you download from them unless your ratio is above a certain number. I was thinking about this the other day, and came to the conclusion that these demands were, in theory, mathematically unreasonable to ask of anyone. In the above example, the original host would have uploaded one complete file and downloaded none, while each of the other nine would have downloaded one complete file and uploaded a ninth of one. Of course, each of them uploaded that ninth eight times, giving a total of eight files’ worth of uploads between the nine of them, against nine downloads. The ratio for the downloaders is $\frac{8}{9}$, and once you add the host’s one upload, the total “global” (for a very small globe of ten computers) ratio is therefore one-to-one.
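The bookkeeping for this example generalizes to any swarm size. The sketch below assumes the same idealized scheme (the host sends each downloader a different piece, and each downloader relays its piece to all the others); `swarm_ratios` is a name made up for illustration.

```python
from fractions import Fraction


def swarm_ratios(n):
    """Upload/download ratios for n computers: one host plus n - 1 downloaders.

    Returns (ratio of each downloader, ratio of the swarm as a whole).
    The host's own ratio is undefined because it downloads nothing.
    """
    if n < 2:
        raise ValueError("need a host and at least one downloader")
    downloaders = n - 1
    # Each downloader relays its 1/downloaders share to every other downloader.
    uploaded_each = Fraction(downloaders - 1, downloaders)
    downloaded_each = Fraction(1)
    total_uploaded = 1 + downloaders * uploaded_each  # host uploads one file
    total_downloaded = downloaders * downloaded_each
    return uploaded_each / downloaded_each, total_uploaded / total_downloaded


each, overall = swarm_ratios(10)
print(each, overall)  # 8/9 1
```

The downloaders always land just under one, and the host’s single upload is exactly what brings the swarm as a whole back to one-to-one.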

This is, of course, an average. As the number of computers (let’s call it $n$) approaches infinity, the ratio for the downloading computers, $\frac{n-2}{n-1}$, approaches $1$. But in the real world, these aren’t closed systems: some people stay on the network and continue to seed pieces to computers with incomplete files, while others bugger off as soon as their download is complete. If we assume that everyone eventually has complete copies of the files being distributed (which is one of the only reasonable assumptions we can make here), then the global ratio has to be 1:1. If you’re one of the system admins out there who leaves their computers on the network all the time and basically seeds files forever, you’re going to have a huge upload-to-download ratio. If you then insist that anyone who downloads from you has a ratio of at least one-to-one, then you create a small problem: where is everyone else going to get the high ratio from? If the global average is one, then, given that these large superseeders exist, getting a ratio of one would depend on there being many people out there with very low ratios indeed, which is probably not what these sysadmins are trying to promote with these ratio restrictions.
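The squeeze can be put in numbers. The sketch below assumes a closed swarm in which every downloaded file was uploaded by someone in the swarm, so total uploads equal total downloads; the function name and the figures in the example are invented for illustration.

```python
from fractions import Fraction


def ratio_left_for_others(seeder_up, seeder_down, others_down):
    """Combined upload/download ratio available to everyone except the seeder.

    All quantities are measured in whole files. Because total uploads must
    equal total downloads, whatever the seeder uploads is upload credit that
    nobody else can earn.
    """
    total_down = seeder_down + others_down
    others_up = total_down - seeder_up
    if others_up < 0:
        raise ValueError("the seeder cannot upload more than was downloaded")
    return Fraction(others_up, others_down)


# Hypothetical numbers: a superseeder uploads 50 files and downloads 1,
# while everyone else downloads 99 files between them.
print(ratio_left_for_others(50, 1, 99))  # 50/99
```

With those made-up numbers the rest of the swarm can only manage about one-half between them, so if some of them reach one-to-one, others must sit well below it.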

The clever among you may have already spotted a way out: continuous growth. If the network constantly expands, then it is possible for everyone to eventually have access to file hosts who stipulate a ratio greater than or equal to one. At the moment, this seems plausible, but obviously there are eventual limits to the size of the network. Eventually, users will probably be forced to set up “sockpuppets”[1] in order to increase their ratios, which is counter-productive because the whole idea of coming up with the system was to use system resources more efficiently.

I guess this rant probably wasn’t what you were expecting. I suspect that most were expecting some kind of philosophical rambling about intellectual property and copyright and the evils of the RIAA. Instead, you got a technical description of how torrents work, which you may or (more likely) may not be interested in. Oh well. Access to information and the rules regarding intellectual property are things that I care deeply about, so I’m sure I will eventually go on a very long rant about them… maybe next time.

[1] A sockpuppet is a phony account created by a user to give the illusion of there being two users.
